The journal Basic and Applied Social Psychology banned the p-value in 2015, after Trafimow (2014) had explained in an editorial a year earlier that inferential statistics were no longer required. In the 2014 editorial, Trafimow notes: “The null hypothesis significance procedure has been shown to be logically invalid and to provide little information about the actual likelihood of either the null or experimental hypothesis (see Trafimow, 2003; Trafimow & Rice, 2009)”. The goal of this blog post is to explain why the arguments put forward in Trafimow & Rice (2009) are incorrect. Their simulations illustrate how meaningless questions provide meaningless answers, but they do not reveal a problem with p-values. Editors can do with their journal as they like, even ban p-values. But if the simulations upon which such a ban is based are meaningless, the ban itself becomes meaningless.

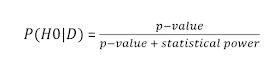

To calculate the probability that the null-hypothesis is true, given some data we have collected, we need to use Bayes’ formula. Cohen (1994) shows how the posterior probability of the null-hypothesis, given a statistically significant result (the data), can be calculated based on a formula that is a poor man’s Bayesian updating function. Instead of creating distributions around parameters, his approach simply uses the p-value of a test (which is related to the observed data), the power of the study, and the prior probability the null-hypothesis is true, to calculate the posterior probability H0 is true, given the observed data. Before we look at the formula, some definitions:

P(H0) is the prior probability (P) the null hypothesis (H0) is true.

P(H1) is the probability (P) the alternative hypothesis (H1) is true. Since I’ll be considering only a single alternative hypothesis here, either the null hypothesis or the alternative hypothesis is true, and thus P(H1) = 1 - P(H0). We will use 1 - P(H0) in the formula below.

P(D|H0) is the probability (P) of the data (D), or more extreme data, given that the null hypothesis (H0) is true. In Cohen’s approach, this is the p-value of a study.

P(D|-H0) is the probability of the data (a significant result), given that H0 is not true, that is, when the alternative hypothesis is true. This is the statistical power of a study.

P(H0|D) is the probability of the null-hypothesis, given the data. This is our posterior belief in the null-hypothesis, after the data has been collected. According to Cohen (1994), it’s what we really want to know. People often mistake the p-value for the probability the null-hypothesis is true.

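If we ignore the prior probability for a moment, the formula in Cohen (1994) is simply:

$$P(H_0 \mid D) = \frac{P(D \mid H_0)}{P(D \mid H_0) + P(D \mid -H_0)}$$

More formally, and including the prior probabilities, the formula is:

$$P(H_0 \mid D) = \frac{P(H_0)\,P(D \mid H_0)}{P(H_0)\,P(D \mid H_0) + (1 - P(H_0))\,P(D \mid -H_0)}$$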

In the numerator, we calculate the probability that we observed a significant p-value when the null hypothesis is true, and divide it by the total probability of finding a significant p-value when either the null-hypothesis is true or the alternative hypothesis is true. The formula shows that the lower the p-value in the numerator, and the higher the power, the lower the probability of the null-hypothesis, given the significant result you have observed. Both the p-value and the power depend on the sample size, and the formula gives an indication why larger sample sizes mean more informative studies.
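The updating rule described above can be written as a small function. This is a minimal Python sketch, not the author's code: the function name `posterior_h0` and its argument names are my own, and it assumes the rule is Bayes' formula with the p-value standing in for P(D|H0) and the power for P(D|-H0), as the definitions above state.

```python
def posterior_h0(p_value, power, prior_h0=0.5):
    """Poor man's Bayesian update: P(H0|D) from p-value, power and prior.

    p_value  plays the role of P(D|H0)
    power    plays the role of P(D|-H0)
    prior_h0 is P(H0); P(H1) is taken to be 1 - P(H0)
    """
    numerator = prior_h0 * p_value
    denominator = numerator + (1.0 - prior_h0) * power
    return numerator / denominator


# Lower p-values at fixed power give a lower P(H0|D).
for p in (0.05, 0.01, 0.001):
    print(f"p = {p}, power = 0.8 -> P(H0|D) = {posterior_h0(p, 0.8):.4f}")

# Higher power at a fixed p-value also gives a lower P(H0|D).
for power in (0.2, 0.5, 0.8):
    print(f"p = 0.05, power = {power} -> P(H0|D) = {posterior_h0(0.05, power):.4f}")
```

With equal priors the prior terms cancel, so the function reduces to p / (p + power).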

How are p and P(H0|D) related?

Trafimow and Rice (2009) used the same formula mentioned in Cohen (1994) to calculate P(H0|D), in order to examine whether p-values drawn from a uniform distribution between 0 and 1 were linearly correlated with P(H0|D). In their simulations, the value for the power of the study is also drawn from a uniform distribution, as is the prior P(H0). Thus, all three variables in the formula are randomly drawn from a uniform distribution. Trafimow & Rice (2009) provide an example (I stick to the more commonly used D for the data, where they use F):

“For example, the first data set contained the random values .540 [p(F|H0)], .712 [p(H0)], and .185 [p(F|–H0)]. These values were used in Bayes’ formula to derive p(H0|F) = .880.”

The first part of the R code below reproduces this example. The remainder of the R script reproduces the simulations reported by Trafimow and Rice (2009). In the simulation, Trafimow and Rice (2009) draw p-values from a uniform distribution. I simulate data when the true effect size is 0, which also implies p-values are uniformly distributed.
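The logic of that example and simulation can be sketched in a few lines. This is a Python sketch under stated assumptions, not the R script the post refers to: it draws the p-value, the power, and the prior directly from uniform distributions (as Trafimow and Rice describe) rather than simulating studies with a true effect of 0, and the names `posterior_h0`, `pearson_r`, and `simulate_correlation`, the seed, and the number of draws are all my own choices.

```python
import random


def posterior_h0(p_value, power, prior_h0):
    """P(H0|D) via Bayes' formula, with p as P(D|H0) and power as P(D|-H0)."""
    numerator = prior_h0 * p_value
    return numerator / (numerator + (1.0 - prior_h0) * power)


def pearson_r(xs, ys):
    """Plain Pearson correlation coefficient."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate_correlation(n_draws=100_000, seed=1):
    """Correlate uniform p-values with P(H0|D) when power and prior are also uniform."""
    rng = random.Random(seed)
    p_values, posteriors = [], []
    for _ in range(n_draws):
        p, power, prior = rng.random(), rng.random(), rng.random()
        if prior * p + (1.0 - prior) * power == 0.0:
            continue  # guard against a (vanishingly unlikely) zero denominator
        p_values.append(p)
        posteriors.append(posterior_h0(p, power, prior))
    return pearson_r(p_values, posteriors)


# The worked example quoted from Trafimow and Rice (2009).
example = posterior_h0(p_value=0.540, power=0.185, prior_h0=0.712)
print(f"Example P(H0|D): {example:.3f}")

print(f"Simulated correlation: {simulate_correlation():.2f}")
```

With the three rounded inputs from the quote, the formula gives about .878, close to the .880 Trafimow and Rice report; the small gap is presumably due to rounding of the printed inputs.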

The correlation between p-values and the probability the null-hypothesis is true, given the data (P(H0|D)), is 0.37. This is a bit lower than the correlation of 0.396 reported by Trafimow and Rice (2009). The most likely reason for this is that they used Excel, which has a faulty random number generator that should not be used for simulations. Although Trafimow and Rice (2009) say that “The present authors’ main goal was to test the size of the alleged correlation. To date, no other researchers have done so”, we already find in Krueger (2001): “Second, P(D|H0) and P(H0|D) are correlated (r = .38)”, which was also based on a simulation in Excel (Krueger, personal communication). So, it seems Krueger was the first to examine this correlation, and the estimate of r = 0.37 is most likely correct. Figure 1 presents a plot of the simulated data.

It is difficult to discern the pattern in Figure 1. Based on the low correlation (0.396 in their simulation, 0.37 in mine), Trafimow & Rice (2009, p. 266) remark that this result “fails to provide a compelling justification for computing p values”, and it “does not constitute a compelling justification for their routine use in social science research”. But they are wrong. They also note the correlation only accounts for 16% of the variance (0.396² ≈ 0.16), which is what you get when calculating a linear correlation coefficient for values that are nonlinearly related, as we will see below. The only conclusion we can draw based on this simulation is that the authors asked a meaningless question (calculating a linear correlation coefficient), which they tried to answer with a simulation in which it is impossible to see the pattern they are actually interested in.

Using a fixed P(H0) and power

The low correlation is not due to the ‘poorness’ (Trafimow & Rice, 2009, p. 264) of the relation between the p-value and P(H0|D), which is, as I will show below, perfectly predictable, but to their choice to randomly choose values for P(D|-H0) and P(H0). If we fix these values (to any value you deem reasonable) we can see the p-value and P(H0|D) are directly related. In Figure 2, the prior probability of H0 is fixed to 0.1, 0.5, or 0.9, and the power (P(D|-H0)) is also fixed to 0.1, 0.5, or 0.9. These plots show that the p-value and P(H0|D) are directly related and fall on a smooth, monotonically increasing (nonlinear) curve.

Lower p-values always mean P(H0|D) is lower, compared to higher p-values. It’s important to remember that significant p-values (left of the vertical red line) don’t necessarily mean that H0 is less likely to be true than H1 (see the bottom-left plot, where P(H0|D) is larger than 0.5 after a significant effect of p = 0.049). The horizontal red lines indicate the prior probability that the null hypothesis is true. We see that high p-values make it more likely that H0 is true (but sometimes the change in probability is rather unimpressive), and low p-values make it less likely.
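The fixed-prior, fixed-power relation described above can be checked numerically without the plots. The following is a minimal Python sketch under my own assumptions: it uses the same Bayes formula as in the definitions, a grid of p-values from 0.001 to 0.999, and the nine prior and power combinations mentioned in the text; the names `posterior_h0`, `p_grid`, and `above_half` are hypothetical.

```python
def posterior_h0(p_value, power, prior_h0):
    """P(H0|D) via Bayes' formula, with p as P(D|H0) and power as P(D|-H0)."""
    numerator = prior_h0 * p_value
    return numerator / (numerator + (1.0 - prior_h0) * power)


levels = (0.1, 0.5, 0.9)
p_grid = [i / 1000 for i in range(1, 1000)]  # p = 0.001, ..., 0.999

all_monotonic = True
above_half = []  # (prior, power) pairs where p = 0.049 still leaves P(H0|D) > 0.5
for prior in levels:
    for power in levels:
        curve = [posterior_h0(p, power, prior) for p in p_grid]
        # With prior and power fixed, a higher p-value should always give a higher P(H0|D).
        monotonic = all(a < b for a, b in zip(curve, curve[1:]))
        all_monotonic = all_monotonic and monotonic
        at_049 = posterior_h0(0.049, power, prior)
        if at_049 > 0.5:
            above_half.append((prior, power))
        print(f"prior = {prior}, power = {power}: "
              f"monotonic = {monotonic}, P(H0|D) at p = 0.049 is {at_049:.3f}")

print("Combinations with P(H0|D) > 0.5 at p = 0.049:", above_half)
```

Under these assumptions only the combination of a prior of 0.9 with a power of 0.1 leaves H0 more likely than not after p = 0.049, which presumably corresponds to the panel singled out in the text. Rearranging the formula also shows that the posterior exceeds the prior exactly when the p-value is larger than the power.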

The problem Trafimow and Rice have identified is not that p-values are meaningless, but that large simulations where random values are chosen for the prior probability and the power do not clearly reveal a relationship between p-values and P(H0|D), and that quantifying the relation between two variables with the improper linear term does not explain a lot of variation. Figure 1 consists of many single points from randomly chosen curves as shown in Figure 2.

No need to ban p-values

This poor man’s Bayesian updating function lacks some of the finesse a real Bayesian updating procedure has. For example, it treats a p = 0.049 as an outcome that has a 5% probability of being observed when there is a true effect. This dichotomous thinking keeps things simple, but it’s also incorrect, because in a high-powered experiment, a p = 0.049 will rarely be observed (p’s < 0.01 are massively more likely) and more sensitive updating functions, such as true Bayesian statistics, will allow you to evaluate outcomes more precisely. Obviously, any weakness in the poor man’s Bayesian updating formula also applies to its use to criticize the relation between p-values and the posterior probability the null-hypothesis is true, as Trafimow and Rice (2009) have done (also see the P.S.).

Significant p-values generally make the null-hypothesis less likely, as long as the alpha level is chosen sensibly. When the sample size is very large, and the statistical power is very high, choosing an alpha level of 0.05 can lead to situations where a p-value just below 0.05 is actually more likely to be observed when the null-hypothesis is true than when the alternative hypothesis is true (Lakens & Evers, 2014). Researchers who have compared true Bayesian statistics with p-values acknowledge they will often lead to similar conclusions, but recommend decreasing the alpha level as a function of the sample size (e.g., Cameron & Trivedi, 2005, p. 279; Good, 1982; Zellner, 1971, p. 304). Some recommendations have been put forward, but these have not yet been evaluated extensively. For now, researchers are simply advised to use their own judgment when setting their alpha level for analyses where sample sizes are large, or statistical power is very high. Alternatively, researchers might opt to never draw conclusions about the evidence for or against the null-hypothesis, and simply aim to control their error rates in lines of research that test theoretical predictions, following a Neyman-Pearson perspective on statistical inferences.
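The point about very high power can be illustrated with a rough calculation. This Python sketch rests on assumptions that are mine rather than the post's: a two-sided z-test with alpha = 0.05, a normal approximation for the test statistic, and "a p-value just below 0.05" read as a p-value between 0.04 and 0.05. The function names are hypothetical.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution


def shift_for_power(power, alpha=0.05):
    """Mean of the z statistic under H1 that yields roughly this power
    (ignores the negligible rejection probability in the opposite tail)."""
    return STD_NORMAL.inv_cdf(1 - alpha / 2) + STD_NORMAL.inv_cdf(power)


def prob_p_between(p_low, p_high, shift):
    """P(p_low < p < p_high) for a two-sided z-test when z ~ Normal(shift, 1)."""
    z_far = STD_NORMAL.inv_cdf(1 - p_low / 2)    # |z| that gives p = p_low
    z_near = STD_NORMAL.inv_cdf(1 - p_high / 2)  # |z| that gives p = p_high
    upper = STD_NORMAL.cdf(z_far - shift) - STD_NORMAL.cdf(z_near - shift)
    lower = STD_NORMAL.cdf(-z_near - shift) - STD_NORMAL.cdf(-z_far - shift)
    return upper + lower


# Under H0 the p-value is uniform, so this interval has probability 0.01.
under_h0 = prob_p_between(0.04, 0.05, shift=0.0)
print(f"H0 true:      P(0.04 < p < 0.05) = {under_h0:.4f}")

for power in (0.5, 0.8, 0.99):
    under_h1 = prob_p_between(0.04, 0.05, shift_for_power(power))
    verdict = "H0" if under_h0 > under_h1 else "H1"
    print(f"power = {power}: P(0.04 < p < 0.05) = {under_h1:.4f} (more likely under {verdict})")
```

In this sketch a p-value between 0.04 and 0.05 is more likely under H1 at moderate power, but more likely under H0 once power reaches 0.99, which is the kind of situation where a lower alpha level becomes attractive.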

If you really want to make statements about the probability the null-hypothesis is true, given the data, p-values are not the tool of choice (Bayesian statistics is). But p-values are related to evidence (Good, 1992), and in exploratory research where priors are uncertain and the power of the test is unknown, p-values might be something to fall back on. There is absolutely no reason to dismiss or even ban them because of the ‘poorness’ of the relation between p-values and the posterior probability that the null-hypothesis is true. What is needed is a better understanding of the relationship between p-values and the probability the null-hypothesis is true, by educating all researchers how to correctly interpret p-values.

P.S.

This might be a good moment to note that Trafimow and Rice (2009) calculate posterior probabilities of p-values assuming the alternative hypothesis is true, but simulate studies with uniform p-values, meaning that the null-hypothesis is true. This is somewhat peculiar. Hagen (1997) explains the correct updating formula under the assumption that the null-hypothesis is true. He proposes to use exact p-values when the null hypothesis is true, but I disagree. When the null-hypothesis is true, every p-value is equally likely, and thus I would calculate P(H0|D) using:

Assuming H0 and H1 are a-priori equally likely, the formula simplifies to:

This formula shows how the null-hypothesis can become more likely when a non-significant result is observed, contrary to the popular belief that non-significant findings don't tell you anything about the likelihood that the null-hypothesis is true. This happens not as a function of the p-value you observe (after all, p-values are uniformly distributed when the null-hypothesis is true, so every p-value is equally uninformative), but through Bayes’ formula. The higher the power of a study, the more likely the null-hypothesis becomes after a non-significant result.

References

Cameron, A. C., & Trivedi, P. K. (2005). Microeconometrics: Methods and Applications. New York: Cambridge University Press.

Cohen, J. (1994). The earth is round (p < .05). American Psychologist, 49, 997-1003.

Good, I. J. (1982). Standardized tail-area probabilities. Journal of Statistical Computation and Simulation, 16, 65-66.

Good, I. J. (1992). The Bayes/non-Bayes compromise: A brief review. Journal of the American Statistical Association, 87, 597-606.

Hagen, R. L. (1997). In praise of the null hypothesis statistical test. American Psychologist, 52, 15-24.

Lakens, D., & Evers, E. (2014). Sailing from the seas of chaos into the corridor of stability: Practical recommendations to increase the informational value of studies. Perspectives on Psychological Science, 9, 278-292. DOI: 10.1177/1745691614528520.

Lew, M. J. (2013). To P or not to P: on the evidential nature of P-values and their place in scientific inference. arXiv:1311.0081.

Trafimow, D. (2014). Editorial. Basic and Applied Social Psychology, 36, 1–2.

Trafimow, D., & Marks, M. (2015). Editorial. Basic and Applied Social Psychology, 37, 1–2.

Trafimow, D., & Rice, S. (2009). A test of the null hypothesis significance testing procedure correlation argument. The Journal of General Psychology, 136, 261-270.

Zellner, A. (1971). An introduction to Bayesian inference in econometrics. New York: John Wiley.

There is a Swedish saying that roughly translates "There is no need to cross the river to fetch some water", meaning that one should take the most straight forward course, and not complicate things. To use p-values as a measure of evidence just feels like "crossing the river" to me, why not go directly for posterior probability? That said, I agree that it is silly to ban them! 3d pie charts are worse than useless, but we don't ban those. :)

"in exploratory research where priors are uncertain and the power of the test is unknown". Well, priors are a way to represent uncertainty, and sure, you can be uncertain about uncertainty, but better to be explicit about it than using the prior implicit in the p-value procedure, is what I'm thinking. Also, when doing Bayesian data analysis, the power is not that important. Of course it is important to base your conclusions on good data, but once you have collected the data, what power you had is not so important as the actual data at hand.

Hi Rasmus, I say the same thing at the end - "If you really want to make statements about the probability the null-hypothesis is true, given the data, p-values are not the tool of choice (Bayesian statistics is)." Indeed, my main point is the logic used to defend the p-value ban, which is weak. And yes, power is a concept useful when designing studies, not after the data has been collected (although meta-analytic power can have its use).

Then we are all in happy agreement! :)

Funny, I published a post on p-values today. However, the best mathematics can not save the p-values from being used incorrectly. I have seen several papers applying wrong methodology and then presenting p-values in order to report statistically significant results. One can do so many things.

http://samuel-zehdenick.blogspot.no/2015/11/why-does-mystical-p-value-sometimes-do.html

First, correlation is a non-parametric concept and I don't see any issue with comparing non-linear vars with it (as long as one doesn't report conf. intervals or p-values for the correlation). Furthermore, T&R are interested in comparing p(H0|D) and p(D|H0) and not in exp(p(H0|D)) or log(p(D|H0)).

Second, similar to conditioning of covariance, conditioning is defined for correlation and is a helpful concept to understand where you disagree with T&R. They report Corr[p(H0|D),p(D|H0)], while you report Corr[p(H0|D),p(D|H0)|p(H0),p(D|-H0)]. There is nothing wrong with any of the two quantities. If one would assume a directed causal relation (e.g. p(p(H0|D)|p(D|H0)), p(p(H0|D)|p(D|H0),p(H0),p(D,-H0)))) the two approaches correspond to computing the direct and total causal effect. So this is just another researchers-report-two-different-quantities-but-give-them-the-same-meaning drama. In particular, you don't offer any arguments for Corr[p(H0|D),p(D|H0)|p(H0),p(D|-H0)] over Corr[p(H0|D),p(D|H0)], just empty assertions like "large simulations where random values are chosen for A and B do not clearly reveal a relationship between C and D". Surely, there is nothing wrong with random sampling and marginalizing of random variables for the purpose of simulation?!!

We can actually compare the plausibility of the two measures by asking whether a reader of a particular scientific publication will be able to estimate them. Corr[p(H0|D),p(D|H0)|p(H0),p(D|-H0)] assumes that p(H0) and p(D|-H0) are known. Is this the case? p(H0) is not known, not as a point value, and p(D|-H0) in many older publications is also not known. Hence, Corr[p(H0|D),p(D|H0)] seems like a much better idea. The issue I have with T&R is that Corr[p(H0|D),p(D|H0)] may change for different choice of prior for p(H0) and p(D|-H0) and this is something that should have been checked.

It was indeed their right to ask the question they asked - but if you read their conclusions, then they go beyond the question they address and draw conclusions not in line with the data - which is my main problem here. Acknowledging that in most common situations, a p < 0.05 is related to a decrease in the probability H0 is true, and oh I don't know, showing that in a plot, would have been nice, which is why I wrote this blog. I think it adds to the discussion - but feel free to disagree.

I haven't read the paper, but from the passages you quoted, the conclusions of T&R are certainly not supported. Nor do I think that it is possible to obtain any evidence to support a ban of p-values. (Still, I think the ban is a beneficial decision so long as it is implemented by the minority of journals.) On the other hand, your blog doesn't show to me that the relation is "not weak". Instead you unload more of unsupported claims. That's a pity because, as I tried to point out, there are interesting questions behind your disagreement with T&R.

Feel free to run with my unsupported claims and criticize them. I think this blog is part of a discussion - feel free to write about the next part of the discussion, here, or on your blog.

That does not change the fact that the p-value is logically inconsistent and that NHST makes people do bogus science.

The relation between p-values and posterior distributions is context dependent, it does not make sense to claim that "it is not weak enough" because that claim only makes sense in a specific context and it is irrelevant to the ban itself.

This kind of extreme statements about 'logically inconsistent' and 'bogus science' are common in people who discuss statistics with affect instead of logic. I find it very unproductive and ignore it.

Wait what? The p-value *is* logically inconsistent. I don't see how someone pointing that out is "arguing with affect." The bogus science comment is clearly debatable but the p-value's inconsistency isn't.

P-values are not logically inconsistent when used from a Neyman-Pearson perspective. If you want to use them to formally quantify posterior probabilities, they are not consistent. Their use as a heuristic is an entirely different matter. It does not need to be consistent, and it deserves to be evaluated given 1) the likelihood people will understand Bayesian statistics, and 2) difficulties in using Bayesian statistics when the goal is to agree on evidence (see the ESP discussions).

Any meaningful use of a p value as an heuristic for Bayesian posteriors presupposes that we "heuristically" understand its simultaneous dependence on variation (both the biological and technical kinds), effect size, sample size, power, and P(H0). For example, the Cohen (1994) formula silently assumes that P(H0)=0.5, which is not an assumption to be made lightly, as it would indicate that a scientist is exclusively testing rather likely (and thus boring) hypotheses. For this reason alone, this formula is not a good heuristic for most scientists, whose priors tend to be <0.5, and vary greatly from experiment to experiment.

I believe that p values are very useful in modern biology, especially when used in bulk for FDR calculations, or even in the classical Neyman-Pearson approach. Having said that, there are good reasons to think that the p should at the very least be de-emphasized as a single number heuristic for evidence for (or against) a single hypothesis. The reasons for this are logical, philosophical, and, most importantly, practical. It looks like the p is simply not worth the trouble in this context. In practice, when I help innocent biologists to calculate p values, the reason is always that the referees want it. Meanwhile, the actual effect is either so large that calculating the p value will not add much to our already high confidence, or the effect is not large enough to be really lifted by p=0.04 (a small effect is more likely to be caused by bias, especially when our experimental hypothesis predicts a larger effect). Because the primary concern of working scientists is the experimental hypothesis vs. bias, not the sampling error, the p value does not bring much to the party.

Why does Cohen's formula assume P(H0) is 0.5? P(H0) is part of the formula - you can fill in any value you want, and Cohen surely doesn't use 0.5 in his 1994 article. I completely agree p-values should be deemphasized. I'm just reacting against people who want to ban them. Especially because I think they are useful to report from a NP perspective. Yes, you could say p < alpha, but as a heuristic, having the exact p-value is not worthless. In lines of studies (I also think single studies should be deemphasized) they are good to report. After the studies, many people will want to evaluate the evidence - Bayesian stats is ok, or just estimate the ES.

Hi Daniel,

"P(D|H0) is the probability (P) of the data (D), given that the null hypothesis (H0) is true. In Cohen’s approach, this is the p-value of a study." The p-value is actually the probability of the data... or more extreme data, given that the null hypothesis (H0) is true, isn't it?

Thanks

Yes, I've fixed it! Thanks

This comment has been removed by a blog administrator.

You have your wish: http://www.sciencemag.org/content/349/6251/aac4716

As a side note, I find your remark to be rather absurd considering how much of the reproducibility movement is coming from within psychology. Ah, well -- trolls will be trolls.

Hi Patrick - I agree. The comment was a waste of time, so I deleted it. I don't like dismissive anonymous commenters.

Please note that the formula attributed to Cohen is incorrect. A correct form is PPV = ((1 - P(H0)) * power) / ((1 - P(H0)) * power + P(H0) * alpha) where PPV - the positive predictive value - is the ratio of the false H0s over all the rejected H0s, and alpha is the significance level. To see why alpha cannot easily be substituted by exact p values, see section 3 of Hooper (2009) J Clin Epidemiol. Dec;62(12):1242-7. "The Bayesian interpretation of a P-value depends only weakly on statistical power in realistic situations."

Also, the p value cannot be directly used in Bayesian calculations, because while the likelihood P(Data | H0) is about the exact sample data, the p value is about data as extreme or more extreme than the sample data. Thus, any Bayesian use of a single p value (derivation of the posterior probability of the H0 using the p value) is likely to be so much of a hassle as to negate the only positive aspect a single p value has - the ease of calculation.

I would say that prohibiting the evidentiary use of single p values seems like a good idea. Especially, since the theoretical maximum we can suck out from a p value is the probability of sampling error as the cause of an effect - which pretty much ignores the scientific question, whether the experimental effect is strong evidence in favor of ones scientific hypothesis, or in favor of some other, often unspecified, hypothesis (like the presence of bias).

Hi Anonymous (such a useful comment deserves a name!) - The formula comes directly from Cohen, 1994, who uses a precise p-value himself. Now it makes total sense to replace this by 0.05 (the logic is the same as in my PS, so I agree) but it's not what has been done in the literature (Cohen, 1994, Hagen, 1997) nor by Trafimow & Rice, 2009 (see their example in the post). But thanks for the literature reference, I'll definitely read it!

Great comment by Anon. The second pointer goes back to the simple fact that p-value is not p(D|H0). P-value is p(D;H0). H0 is not a random variable. As a consequence p(D;H0) is a number while p(D|H0) is a random variable. Now what you Daniel (and Cohen?), do is to take the formula which says p(D|H0) and plug in p(D;H0) instead of p(D|H0). To be clear, it's perfectly valid for a frequentist to investigate p(D|H0) or p(H0|D), you just should not mix p(D;H0) with p(D|H0) in a single formula.

Geez, Daniel, so much confusion in this blog :)

Re-reading Anon's comment, I also see lot of confusion in my previous comment :) Let me give it some more thought.

Hey - this blog is my sandbox, where I play around. There is a reason this is a blog and not an article - I'm putting it online, and hope to get feedback. Again, I'm following Cohen and Hagen - but my PS shows I disagreed. I'd love to hear other viewpoints, related articles, etc. I'm not clear yet whether it is better to use the exact p, or the alpha level, and why. I'll look into it, but please educate me if you already know this. And if this blog is confusing - that's a good thing. I'll not be the only person struggling with the confusing aspects of this, so better to get it out here and discuss it.

Hi,

I don't think Cohen actually suggested the formula "p / (p + power)" (or related). It seems so but I think Cohen simply chose a bad example, rightly criticized by Hagen (1997). Still, Cohen did not go on to propose the formula, Hagen did.

Regarding Hagen, he uses "alpha / (alpha + power)". You substitute "p / (p + power)", a new formulation. My question is, which power are you talking about, a priori power or a posteriori power?

Cheers,

(Perezgonzalez, Massey University)

Thanks for your comment. I agree the difference between alpha and p is important, and worthwhile to explore. To be clear, Trafimow & Rice (2009) use p, and that is what I am reproducing and checking. The question whether they should have used alpha is very interesting. We are talking about a-priori power in the formula.

Hi.

Thanks. I think it is more coherent to use alpha when following Neyman-Pearson's approach, and 'p' when following Fisher's approach (but with the latter we then have the problem of what to do with power).

Because Trafimow (et al) criticize NHST, my reasonable guess is that they are using the hybrid model that mixes NP's and F's approaches, so their results may not be too consistent.

I also agree with you that their reasons to ditch NHST are lame, statistically speaking. I would recommend ditching NHST simply because of it being an inconsistent hybrid model (http://dx.doi.org/10.3389/fpsyg.2015.01293).
